Among other important elements of programmatic SEO, having a high-quality dataset is critical. A considerable part of your content quality depends directly on the dataset you have.

But finding a high-quality dataset is difficult. There’s no fixed process for this, but you need the dataset anyway.

And in this post, I will explain how to find datasets for programmatic SEO projects, and how I do it for my projects. Let’s get into it…

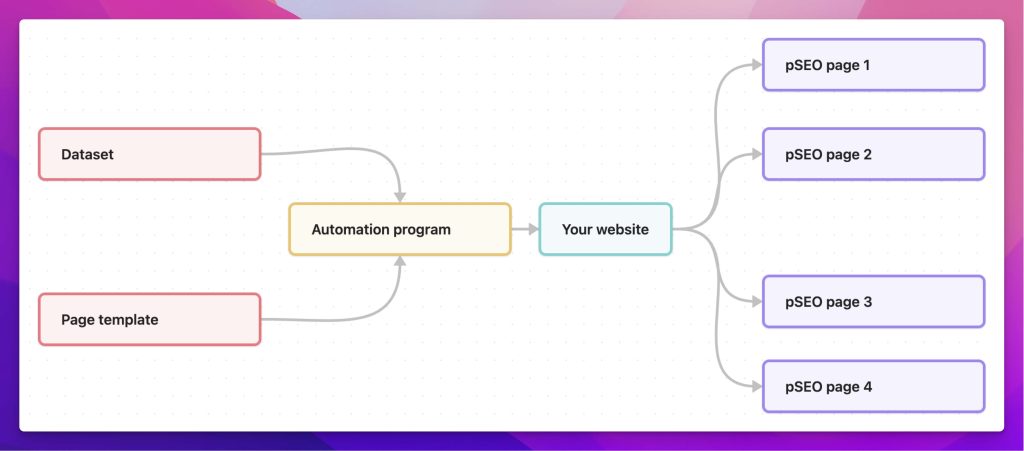

Why do you require datasets for programmatic SEO?

Every programmatic SEO project requires data points that you map to a page template to create 100s or even 1000s of pages at once. Datasets are what contain those required data points.
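As a rough illustration of that mapping, here is a minimal Python sketch that renders one page per dataset row. The CSV columns, the sample values, the URL slug pattern, and the template wording are all made up for the example; a real project would read a downloaded file and use its own template.

```python
import csv
import io
from string import Template

# Hypothetical dataset with made-up values; in practice this would be
# the contents of a CSV file you downloaded.
SAMPLE_CSV = """city,avg_price,listings
Pune,540000,1200
Jaipur,480000,860
"""

# Hypothetical page template; the $placeholders match the CSV column names.
PAGE_TEMPLATE = Template(
    "<h1>Used car prices in $city</h1>\n"
    "<p>The average price is $avg_price INR across $listings listings.</p>"
)


def render_pages(csv_text: str) -> dict[str, str]:
    """Return {slug: html}, with one rendered page per dataset row."""
    pages = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        slug = "used-car-prices-" + row["city"].lower().replace(" ", "-")
        pages[slug] = PAGE_TEMPLATE.substitute(row)
    return pages


pages = render_pages(SAMPLE_CSV)
for slug, html in pages.items():
    print(slug)
    print(html)
```

Each extra row in the dataset becomes one more page, which is why the quality of the dataset matters so much.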

For example, take a look at the diagram below that shows the working of programmatic SEO and where you need the dataset:

There are two ways to approach a programmatic SEO project: either you have the data and then think about which keywords to target, or you have the keywords and then look for relevant data.

Here, I will assume that you already know the keywords and are now looking for the datasets.

Finding datasets for pSEO

Once you have finalized the keywords you’d be targeting, the required dataset can be found in the following 2 ways:

- Data is available on one webpage: In this case, someone (maybe the government or an individual) already has the data, and they have put everything on a page from where you can download it for free or by paying.

- Data is present on multiple web pages: If the data points you seek are spread across multiple web pages on the internet, you need to use data scraping techniques to collect them from those pages.

Now, let’s take a look at each of these scenarios:

1. Data is available on one webpage

It’s the easiest way to get the data for your pSEO projects, and you can get it for free, most of the time. You just have to find that one webpage where the data points you want are present, and then download the entire dataset in your preferred format.

But finding that one webpage is the key here. Here is how I approach it:

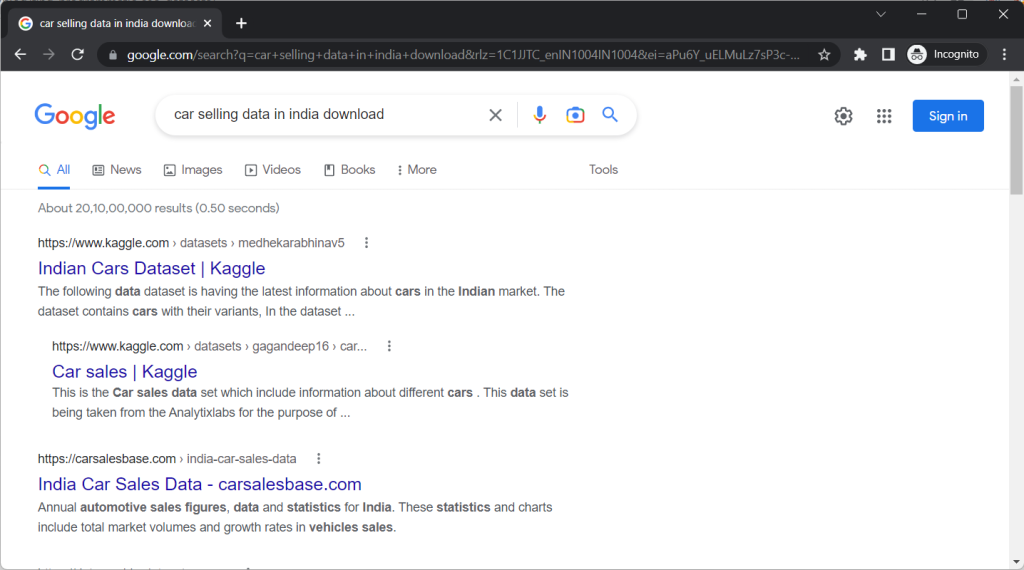

For example, if I am looking for Indian car-selling-related data, I will first go directly to Google, search for “car selling data in India download”, and check the top 20 results. For common datasets, there’s a good chance that you will find what you’re looking for in the first few results.

If I still don’t find it, I start using the filetype: and site: search operators. After that, I try Google’s Dataset Search or search government websites.

So, some possible ways to find these pre-available datasets are:

a. Take the help of Google

Google can be extremely useful for finding the datasets you need. Here are some ways you can leverage Google:

- Search directly for the dataset in Google – add the “download data” prefix or suffix to your keyword, and it will automatically show datasets from multiple sites.

- Use the filetype: search operator – Google also indexes MS Excel files (.xls) in the search, and adding filetype:xls at the end of your search query can be helpful.

- Use the site: search operator – This operator helps you search a specific website, which you can use to find public Google Sheets. Just add site:docs.google.com/spreadsheets at the end of your search, and you’ll only get Google Sheets as results.

  - You can also use the site: operator to search Kaggle or other sites. Just add site:kaggle.com and it will only show results from that site.

- Use Google’s Dataset Search – This relatively new tool from Google shows only datasets, drawn from multiple sites, as results.
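The operator-based searches above can be composed by hand, but if you run them for many keywords, a small helper saves typing. This is a minimal sketch: the function names `build_query` and `search_url` are my own, and it only builds the query strings and URLs for you to open in a browser.

```python
from urllib.parse import quote_plus


def build_query(keywords: str, site: str = "", filetype: str = "") -> str:
    """Compose a search query with optional site: and filetype: operators."""
    parts = [keywords.strip()]
    if site:
        parts.append(f"site:{site}")
    if filetype:
        parts.append(f"filetype:{filetype}")
    return " ".join(parts)


def search_url(query: str) -> str:
    """Build a Google search URL that can be opened in a browser."""
    return "https://www.google.com/search?q=" + quote_plus(query)


queries = [
    build_query("car selling data in India download"),
    build_query("car selling data in India", filetype="xls"),
    build_query("car selling data in India", site="docs.google.com/spreadsheets"),
    build_query("car selling data in India", site="kaggle.com"),
]

for query in queries:
    print(search_url(query))
```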

b. Search government sites and repositories

The governments of almost all countries have several websites with public data that you can leverage for your projects. Almost all the time, you can download the data for free.

For example, the US government has data.gov where there are more than 300k datasets from different categories available. Similarly, the Indian government has data.gov.in where more than 800k different datasets, as well as APIs, are available.

c. Raid Reddit

Reddit has some active communities where you can find datasets related to numerous distinct topics. Some cool Reddit communities are:

The best thing about these Reddit communities is that not only can you download the data that someone has already made available, but you can also request datasets related to your projects, and someone might help you out.

d. Raid GitHub

GitHub has a lot of data present in numerous formats. And the following ways can help you extract what you are looking for.

- Search directly on GitHub – Go to GitHub.com and directly search for the data you’re looking for. For example, if I need data about car selling info, then I can search for “car selling data” or something like that.

- Use site:github.com on Google – It’s even better if you use Google to search GitHub. Add site:github.com at the end or start while googling, and it will only show results from GitHub.

- Use site:github.com along with inurl:csv – If you’re specifically looking for datasets in the CSV format, use site:github.com inurl:csv at the end or the start of your search terms on Google.

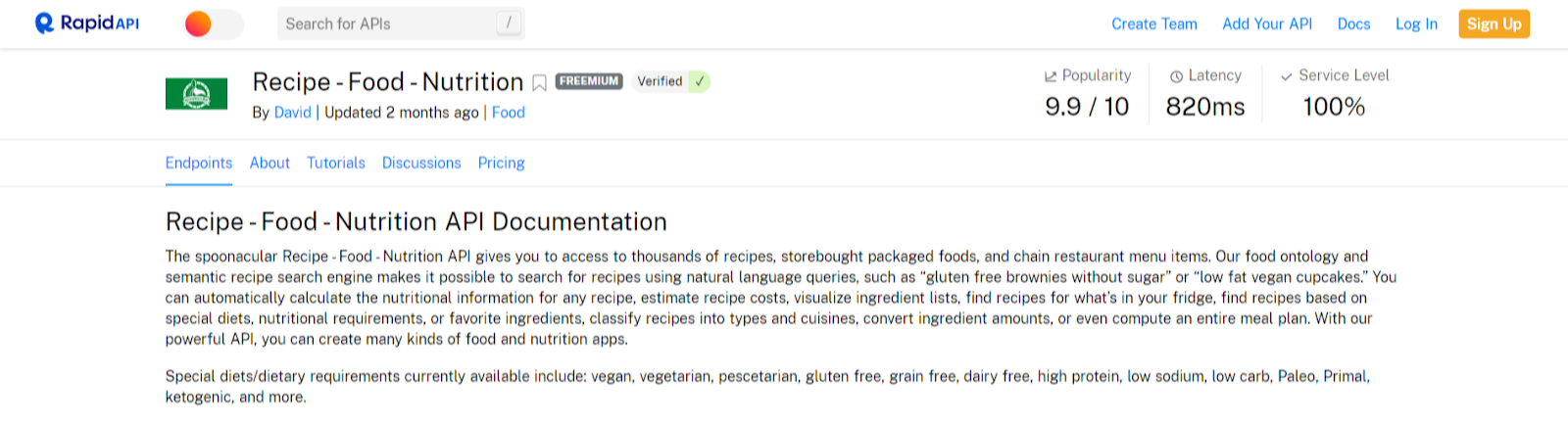

e. Public APIs

Data isn’t limited to formats like CSV, XLS, or MySQL; it may also be available through an API. And if you’re familiar with how APIs work, you can use the API data to create your programmatic SEO site.

One of the biggest API sites is RapidAPI where you can find 1000s of APIs for your projects – some free, some paid.

For example, I found this interesting food nutrition-related API that can be used to create a remarkable programmatic site in the niche.
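To give a sense of what working with API data involves, here is a minimal Python sketch that flattens a JSON response into rows you could map to a page template. The response shape, the field names (`foods`, `nutrients`, and so on), and the values are invented for illustration; any real API will document its own structure, and the request itself is left out.

```python
import csv
import io
import json

# Hypothetical response body with made-up values; a real API will have
# its own field names and nesting.
SAMPLE_RESPONSE = """
{"foods": [
  {"name": "Apple", "nutrients": {"calories": 52, "protein_g": 0.3}},
  {"name": "Banana", "nutrients": {"calories": 89, "protein_g": 1.1}}
]}
"""


def flatten_foods(response_text: str) -> list[dict]:
    """Turn the nested JSON into one flat dict per food item."""
    rows = []
    for item in json.loads(response_text)["foods"]:
        row = {"name": item["name"]}
        row.update(item["nutrients"])
        rows.append(row)
    return rows


def to_csv(rows: list[dict]) -> str:
    """Serialize the flat rows as CSV text, using the first row's keys as header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(rows[0].keys()), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = flatten_foods(SAMPLE_RESPONSE)
print(to_csv(rows))
```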

Apart from RapidAPI, here are some more API listing sites:

f. Search on dataset repositories/search engines

There are several dataset repository sites you can’t ignore. I have used datasets from these sites numerous times for my projects.

Let’s take a look at the list:

- Kaggle – Kaggle offers a wide variety of datasets on a diverse set of topics, including everything from financial data to satellite images.

- Awesome Public Datasets – It’s a collection of 100s of datasets in several categories, and it’s regularly updated by the community.

- Data World – data.world is a platform that provides access to a wide variety of datasets, as well as tools for collaboration, visualization, and analysis. It offers datasets on a diverse range of topics, including finance, sports, politics, and more.

- DataSN – Contains 1000s of properly cleaned datasets in different formats and categories.

- NASA EarthData – If you want datasets related to the earth, there can’t be anything better than NASA’s open earth data.

- World Bank Open Data – If you’re looking for GDP, finance, population, etc. related data for different countries, World Bank’s open data can be of huge help.

- Academic Torrents – Academic Torrents contains 100s of TBs of data, and you can find some massive datasets from here. For example, I found this image dataset of more than 200 birds.

Not to mention, most of the dataset repositories mentioned above are completely free to use.

2. Data is present on multiple web pages

If the data you want is present on multiple web pages of either the same site or different sites, data scraping is the way to collect it.

Let me provide you with an example:

Suppose I want to create an eCommerce comparison site where I compare the prices of the same product from multiple websites like Amazon, eBay, Walmart, Flipkart, etc. As it’s nearly impossible to manually extract the price data, along with the other product specifications, from multiple websites, I would want an automated script that does this for me at regular intervals.

And that’s data scraping.

Generally, scraping the publicly available data is not illegal, but make sure to read the terms and conditions of the website you want to scrape.

Data scraping can be done in one of the following ways:

- By using no-code tools

- By using custom scripts

Let’s take a look at each of these.

a. By using no-code tools

If the data extraction is not too complicated, there are certain no-code tools that you can use for scraping. Here is a list of a few well-known tools:

- OctoParse

- ScrapingBee

- Zyte

- ParseHub, etc.

I have used OctoParse for my personal projects and it does wonders. When you enter the URL that you want to scrape from, generally, it automatically detects the repeated elements on the page as well as pagination, making it super easy to get started. The desktop version of OctoParse lets you scrape up to 10,000 rows of data under the free plan. And you can export the data in CSV, XLS, JSON, and even MySQL format.

b. By using custom scripts

If you’re familiar with writing custom scraper scripts, you can scrape as much data as you want without any limitations. Python libraries like Selenium, Scrapy, BeautifulSoup, Requests and lxml have ample documentation to get started with.
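To show the basic idea of a custom scraper, here is a minimal sketch. Note that it uses Python’s built-in `html.parser` instead of the libraries named above, so that it runs without installing anything, and it parses an inline HTML string rather than fetching a live page. The markup, class names, and products are hypothetical; every real site needs its own selectors.

```python
from html.parser import HTMLParser

# Hypothetical listing markup; real sites use their own tags and class names.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Phone A</span><span class="price">$199.00</span></li>
  <li class="product"><span class="name">Phone B</span><span class="price">$249.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collect the text of <span class="name"> and <span class="price"> per <li>."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished rows
        self._field = None   # which span we are currently inside, if any
        self._current = {}   # row being built for the current <li>

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.products.append(self._current)
            self._current = {}


def scrape(html: str) -> list[dict]:
    """Parse the HTML and return one dict per product."""
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


products = scrape(SAMPLE_HTML)
print(products)
```

For real pages, the libraries mentioned above handle messy markup, pagination, and JavaScript-heavy sites far better than this bare-bones parser.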

But… data scraping is a complicated as well as a time-consuming process – first, scrape the data, and then clean everything up to make it usable. So make sure you’re prepared for all that.
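The clean-up step often looks something like the following minimal sketch. It assumes scraped rows with hypothetical `name` and `price` fields, and it shows three typical chores: trimming whitespace, converting price strings to numbers, and dropping duplicates and unusable rows. The sample rows are made up.

```python
from typing import Optional


def parse_price(raw: str) -> Optional[float]:
    """Turn strings like ' $1,299.00 ' into 1299.0; return None if unparseable."""
    cleaned = raw.strip().replace("$", "").replace(",", "")
    try:
        return float(cleaned)
    except ValueError:
        return None


def clean_rows(rows: list[dict]) -> list[dict]:
    """Normalize names, parse prices, and drop duplicate or unusable rows."""
    seen = set()
    result = []
    for row in rows:
        name = " ".join(row.get("name", "").split())  # collapse stray whitespace
        price = parse_price(row.get("price", ""))
        key = name.lower()  # treat names differing only in case as duplicates
        if not name or price is None or key in seen:
            continue
        seen.add(key)
        result.append({"name": name, "price": price})
    return result


# Hypothetical raw output from a scraper.
raw_rows = [
    {"name": "  Phone  A ", "price": "$1,299.00"},
    {"name": "phone a", "price": "$1,299.00"},   # duplicate of the first row
    {"name": "Phone B", "price": "N/A"},         # unusable price
    {"name": "Phone C", "price": " 249.5 "},
]

print(clean_rows(raw_rows))
```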

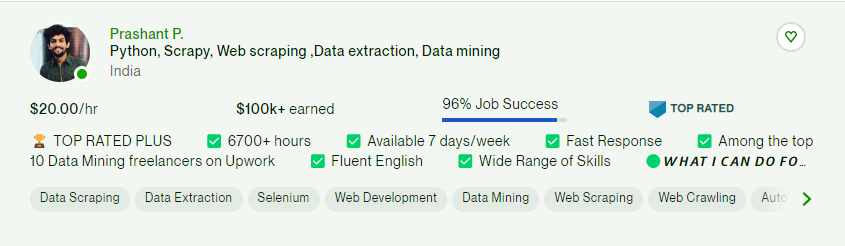

I recommend hiring an experienced freelance data scraper for this kind of work. It takes significant effort off your shoulders, and you can focus all your time and energy on other important aspects of programmatic SEO.

On freelance platforms like Upwork, you can find an experienced web scraper for as low as $20 per hour, as you see in the screenshots above.

Just be detailed and descriptive with your requirements so that there is no confusion and there are no surprises at the end.

Read next: How to Scrape Data for Programmatic SEO

Bonus tip

I will end this post with a bonus tip.

You are not bound to use only one dataset per programmatic SEO project; you can combine multiple datasets as well. For example, if one dataset provides me with car names and the specifications of each car, I can find another dataset that has the yearly selling data of the same cars, and then combine the two to construct a unique dataset.
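Here is a minimal Python sketch of combining two such datasets on the car name. The car names, columns, and numbers are made up, and the `normalize` helper reflects my assumption that names will differ slightly in case and spacing between sources.

```python
def normalize(name: str) -> str:
    """Lowercase and collapse whitespace so names from both sources match."""
    return " ".join(name.lower().split())


def merge_on_car(specs: list[dict], sales: list[dict]) -> list[dict]:
    """Attach each sales row to the spec row with the same car name."""
    specs_by_car = {normalize(spec["car"]): spec for spec in specs}
    merged = []
    for sale in sales:
        spec = specs_by_car.get(normalize(sale["car"]))
        if spec is None:
            continue  # no matching spec row; skip rather than guess
        merged.append(
            {**spec, "year": sale["year"], "units_sold": sale["units_sold"]}
        )
    return merged


# Hypothetical datasets with made-up values.
specs = [
    {"car": "Car A", "engine_cc": 1200, "fuel": "Petrol"},
    {"car": "Car B", "engine_cc": 1500, "fuel": "Diesel"},
]
sales = [
    {"car": "car a ", "year": 2022, "units_sold": 15000},
    {"car": "Car C", "year": 2022, "units_sold": 900},
]

merged = merge_on_car(specs, sales)
print(merged)
```

Rows without a match in both datasets are dropped here; whether to keep them with blank fields instead depends on how your page template handles missing data.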

And now that you have a high-quality dataset, remember to create a high-quality page template to incorporate the dataset you have found.

If you have any related queries, feel free to let me know in the comments below.
