Whether you found a ready-made dataset or scraped it from multiple sources, you still need to “prepare” the dataset for your programmatic SEO project. Sometimes that means modifying a few data points, and sometimes it means adding new ones.

In this short blog post, I list best practices for programmatic SEO data preparation, along with a few interesting tips and tricks.

Let’s take a look…

Data preparation guidelines for pSEO

All the guidelines listed can be important for your pSEO projects, but they are in no specific order.

1. Create alternate versions of topics/headings

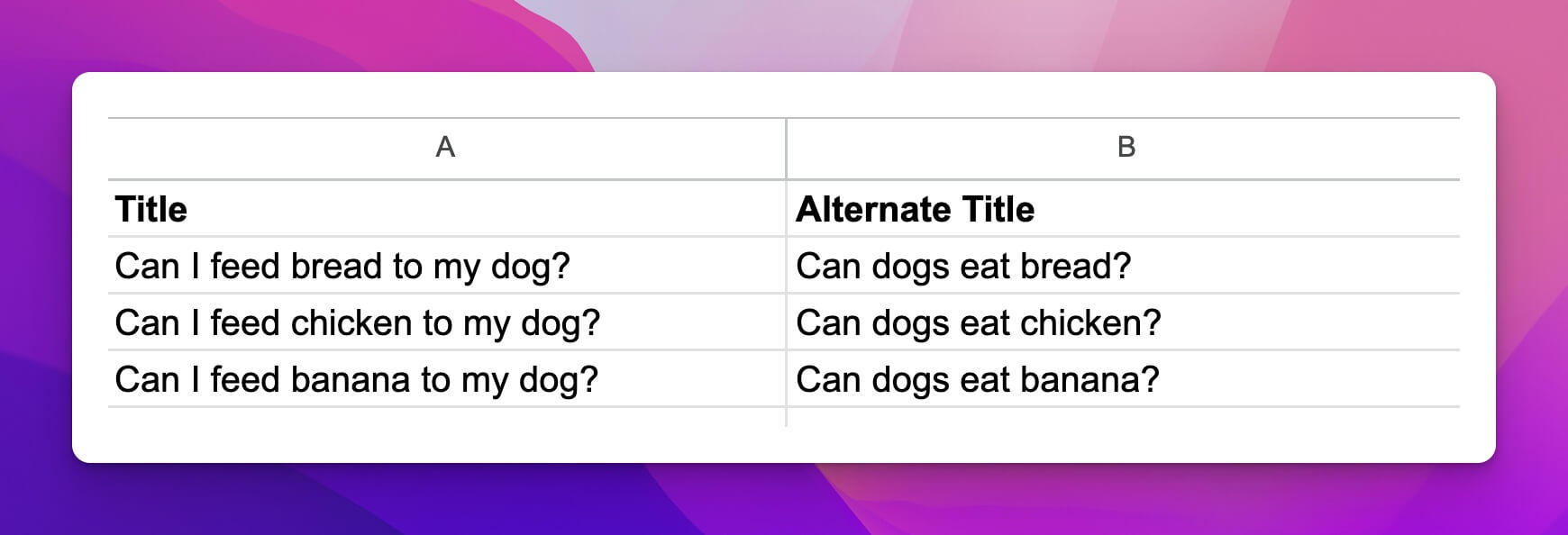

In your dataset, if possible, create a few alternate versions of topics or headings that can be used somewhere on the generated pages. You will be able to use different variations of the main keyword, and that will be helpful for SEO.

For example, if the main topic is “can I feed bread to my dog”, the alternate version of this title can be “can dogs eat bread”, as you can see in the screenshot above. This way, you will have a better chance of ranking for both of these keywords.

You are not limited to one variation either. If possible, create several and note each one down in its own column.

2. Create lowercase versions of topics

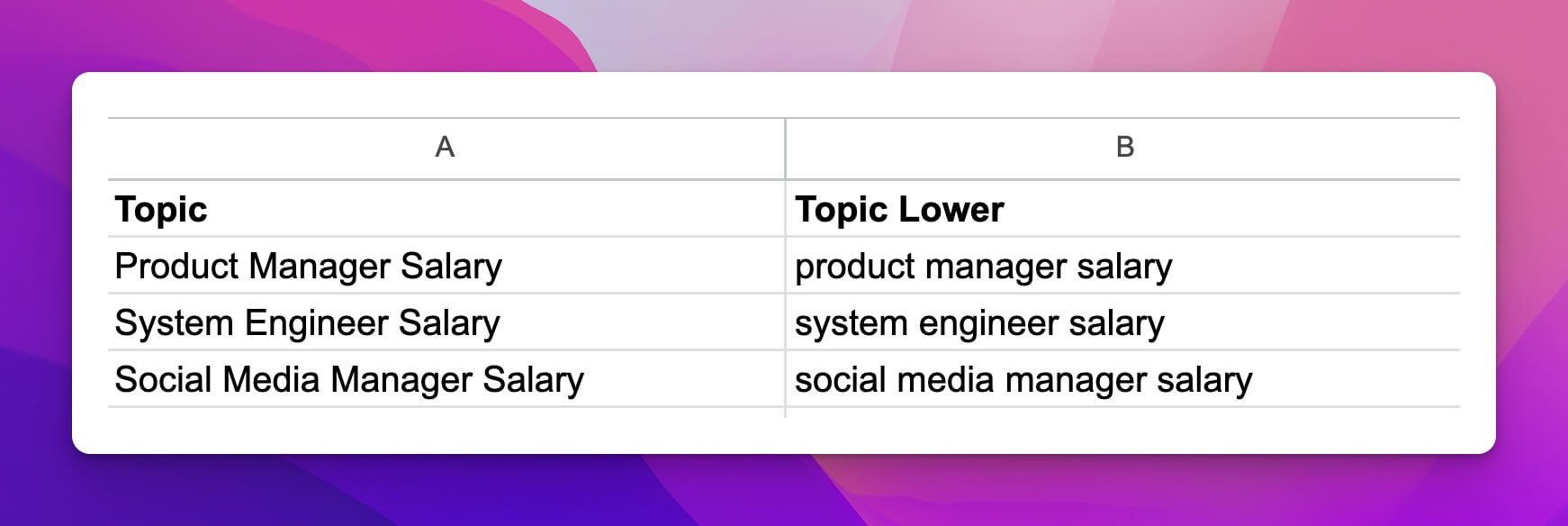

Generally, the pSEO topics that you collect or prepare are in title case, and unnecessarily capitalised words in the middle of a sentence don’t look good. You can fix this easily by creating another data point that contains the lowercase version of each topic.

For example, if I am creating programmatic pages about salaries for different posts, I can have lowercase versions of the topic as shown in the screenshot above. I just used the =LOWER() function in Google Sheets to do that.

Sometimes, when the topic contains a “name”, you will have to either manually lowercase the text apart from the “name”, or ask ChatGPT to provide you with a formula and instructions on how to do it.
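If you would rather script this step than do it by hand, here is a minimal Python sketch. It assumes you can list the names that must keep their capitalisation; the function name and the `protected` set are hypothetical and only for illustration.

```python
import re


def lowercase_except(topic: str, protected: set[str]) -> str:
    """Lowercase every word in `topic` unless it appears in `protected`."""
    # Split on whitespace but keep the separators so the spacing is preserved.
    parts = re.split(r"(\s+)", topic)
    return "".join(part if part in protected else part.lower() for part in parts)


print(lowercase_except("Software Engineer Salary At Google", {"Google"}))
# → software engineer salary at Google
```

The match is exact and per word, so a name followed by punctuation (for example “Google,”) would still be lowercased unless you add that form to the set.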

Related: Different ways to use ChatGPT for pSEO

3. Create plural versions of topics

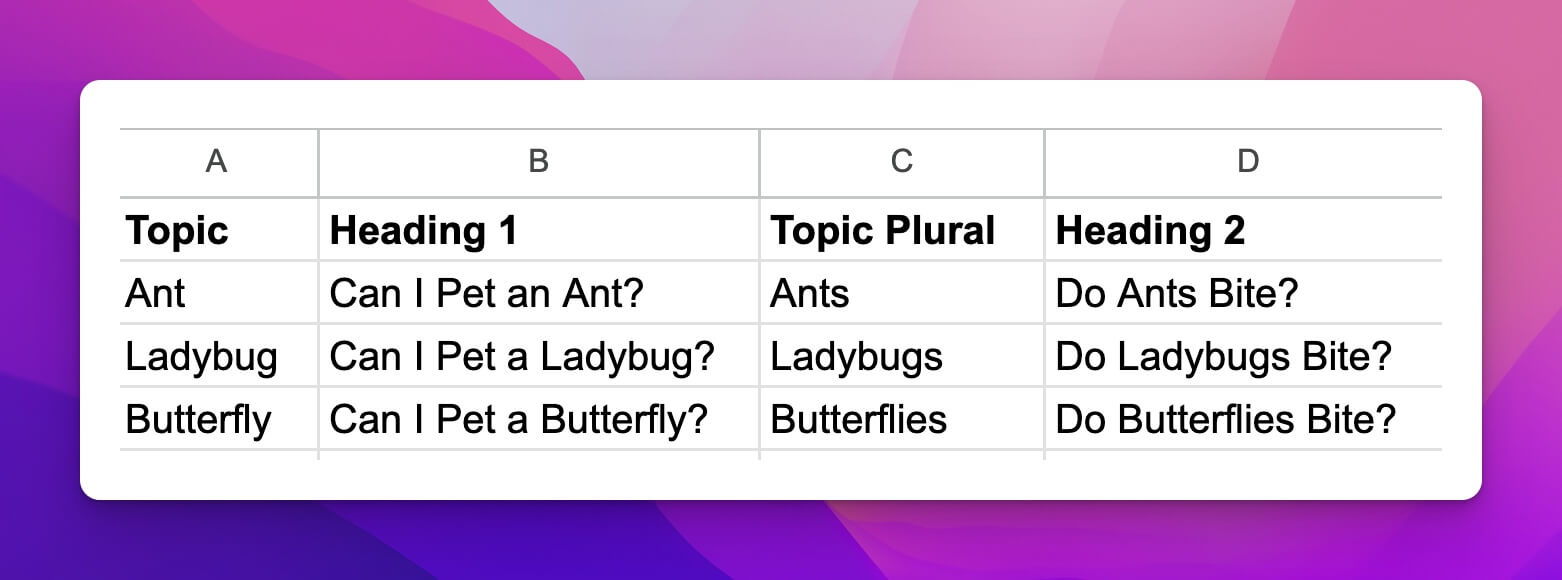

Just like the alternate and lowercase versions of topics, you should also have plural versions that can be used where needed. Grammatically correct headings and sentences make your programmatic posts feel more natural to read.

For example, in the above screenshot, see how two different topics are being used inside two different headings so that they’re grammatically correct.

You can either manually create the plural version for each row of data, or utilise the OpenAI API. I have used GPT-4 multiple times to create plural versions of topics, and it’s very convenient. See how quickly you can generate plural versions of topics by using the API below:

For this, I just provide a simple 1-line prompt, “provide me with the plural version of the {topic}”, and it works perfectly for me.

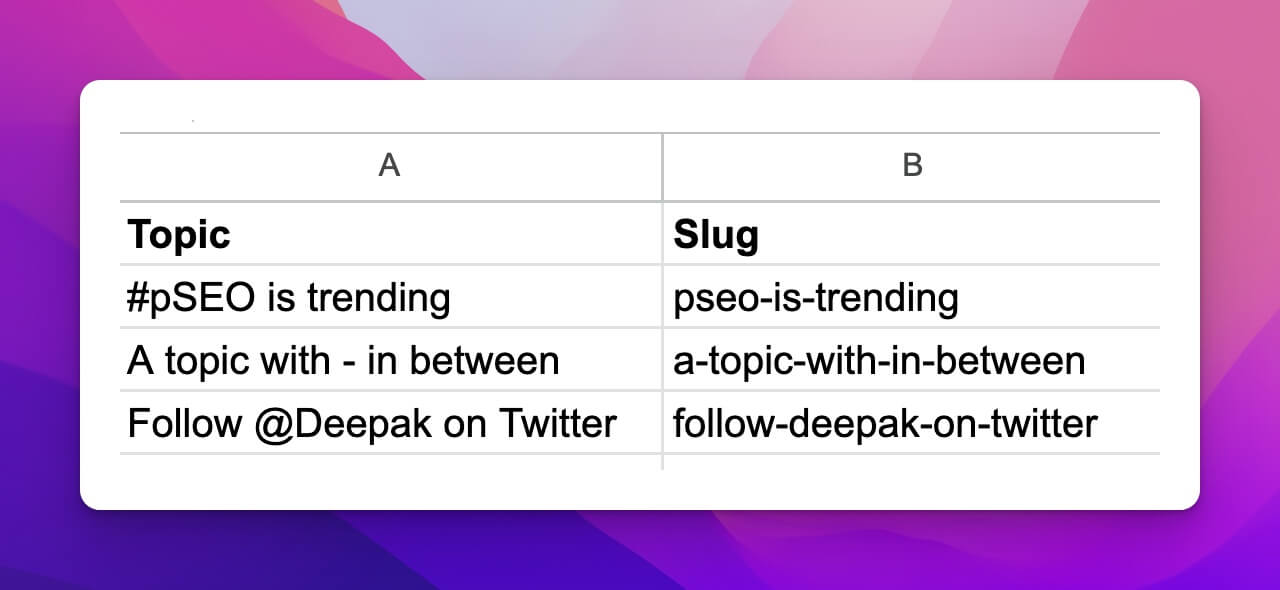
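A minimal Python sketch of the same idea, looping the 1-line prompt over every topic. The function names and the `ask_model` parameter are hypothetical: `ask_model` stands for whatever function sends a prompt to your model and returns its text reply, so no specific API client is assumed here.

```python
from typing import Callable

# The 1-line prompt quoted above, with a placeholder for the topic.
PROMPT_TEMPLATE = "provide me with the plural version of the {topic}"


def build_plural_prompt(topic: str) -> str:
    """Fill the topic into the prompt template."""
    return PROMPT_TEMPLATE.format(topic=topic)


def pluralize_topics(topics: list[str], ask_model: Callable[[str], str]) -> dict[str, str]:
    """Map each topic to the model's reply, with surrounding whitespace removed."""
    plurals = {}
    for topic in topics:
        reply = ask_model(build_plural_prompt(topic))
        plurals[topic] = reply.strip()
    return plurals


def fake_model(prompt: str) -> str:
    # Stand-in for a real API call (assumption): naively appends "s" to the topic.
    topic = prompt.removeprefix("provide me with the plural version of the ")
    return topic + "s"


print(pluralize_topics(["software engineer", "data analyst"], fake_model))
```

Swap `fake_model` for a function that calls your actual model, and write the returned values into a new “plural” column of the dataset.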

4. Have slugs created in the dataset

Creating slugs right inside your dataset is a better way to do programmatic SEO, as it gives you some additional control over the organisation of topics and internal linking.

I use the following Google Sheets formula to create slugs for topics, as shown in the above screenshot.

=LOWER(REGEXREPLACE(REGEXREPLACE(REGEXREPLACE(TRIM(A2),"[^a-zA-Z0-9]+","-"), "-{2,}", "-"), "^-+|-+$", ""))

The formula takes care of special characters and emojis as well: every run of non-alphanumeric characters becomes a single hyphen, and any leading or trailing hyphens are removed.
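If your dataset lives outside Google Sheets, the same steps can be sketched in Python. This is a direct translation of the formula above, not a full slug library, and the function name `slugify` is my own.

```python
import re


def slugify(topic: str) -> str:
    """Mirror the Sheets formula: trim, hyphenate, collapse, strip, lowercase."""
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-zA-Z0-9]+", "-", topic.strip())
    # Collapse repeated hyphens (kept for parity with the formula).
    slug = re.sub(r"-{2,}", "-", slug)
    # Drop hyphens at the start and end.
    slug = re.sub(r"^-+|-+$", "", slug)
    return slug.lower()


print(slugify("Can Dogs Eat Bread? 🐶"))
# → can-dogs-eat-bread
```

Note that, like the formula, this treats accented letters as special characters too, so they are replaced by hyphens rather than transliterated.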

5. Short paragraphs

If you’re using AI to generate descriptions or introductions right inside your dataset, the output generally contains huge paragraphs that are not very readable.

“Do not keep more than 2-3 sentences in a paragraph and do not keep more than 35-40 words in a sentence.”

But if you add a simple instruction like the above to your prompt, the generated output is much better. If I am using GPT, I do this all the time.
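The model will not always follow the instruction, so it can help to check the generated text afterwards. Below is a rough Python sketch, assuming paragraphs are separated by blank lines and sentences end in “.”, “!” or “?”. The function name and the default limits (3 sentences, 40 words) are my own choices based on the instruction above.

```python
import re


def find_long_passages(text: str, max_sentences: int = 3, max_words: int = 40) -> list[str]:
    """Report paragraphs with too many sentences and sentences with too many words."""
    problems = []
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    for number, paragraph in enumerate(paragraphs, start=1):
        # Naive sentence split: break after ., ! or ? followed by whitespace.
        sentences = [s for s in re.split(r"(?<=[.!?])\s+", paragraph.strip()) if s]
        if len(sentences) > max_sentences:
            problems.append(f"paragraph {number}: {len(sentences)} sentences")
        for sentence in sentences:
            word_count = len(sentence.split())
            if word_count > max_words:
                problems.append(f"paragraph {number}: sentence with {word_count} words")
    return problems


print(find_long_passages("One. Two. Three. Four.\n\nShort one."))
# → ['paragraph 1: 4 sentences']
```

The sentence split is deliberately simple and will miscount abbreviations such as “e.g.”, so treat the report as a hint about which rows to re-read, not as a hard rule.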

6. Check for duplicate topics

When you are dealing with hundreds of rows of data, it’s easy to let duplicates slip into your dataset by mistake. But even a single duplicate row can do a lot of damage, as there will be 2 identical pages on your website when you connect the database to the page template.

If you’re using Google Sheets, then it’s straightforward to keep track of the duplicates. I use SORT, UNIQUE, COUNTA, and COUNTUNIQUE functions to detect duplicates, as shown in the above tweet.
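Outside Google Sheets, the same check takes a few lines of Python. This sketch assumes that two topics count as duplicates when they match after trimming whitespace and ignoring case, which is my own assumption; the function name is hypothetical.

```python
from collections import Counter


def find_duplicates(topics: list[str]) -> list[str]:
    """Return the normalised topics that appear more than once."""
    # Normalise so that "Can Dogs Eat Bread" and "can dogs eat bread " match.
    counts = Counter(topic.strip().lower() for topic in topics)
    return sorted(topic for topic, count in counts.items() if count > 1)


topics = ["Can Dogs Eat Bread", "can dogs eat bread ", "Can Dogs Eat Rice"]
print(find_duplicates(topics))
# → ['can dogs eat bread']
```

Running the same function on the slug column is a useful second check, since two differently worded topics can still produce the same slug.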

7. Generate meta tags with AI

Manually creating meta tags, especially meta descriptions, is cumbersome and repetitive. So it’s better to use AI to generate all the required meta tags right inside your database. Depending on your tech stack (WordPress, static site, etc.), you should be able to pass the meta details directly to the page template while connecting the database.

I specify in the prompt to keep the output under 150 characters for the meta description and 60 characters for the meta title, and GPT-4 gets it right most of the time.
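Since the model only gets it right most of the time, a quick length check catches the rows that need another pass. Here is a minimal Python sketch; the column names `slug`, `meta_title` and `meta_description` are hypothetical, so adjust them to match your dataset.

```python
def find_overlong_meta(rows: list[dict], max_title: int = 60, max_description: int = 150) -> list[tuple]:
    """Return (slug, field, length) for every meta field over its limit."""
    limits = {"meta_title": max_title, "meta_description": max_description}
    problems = []
    for row in rows:
        for field, limit in limits.items():
            length = len(row[field])
            if length > limit:
                problems.append((row["slug"], field, length))
    return problems


rows = [
    {
        "slug": "can-dogs-eat-bread",
        "meta_title": "Can Dogs Eat Bread?",
        "meta_description": "x" * 151,  # placeholder text, one character over the limit
    },
]
print(find_overlong_meta(rows))
# → [('can-dogs-eat-bread', 'meta_description', 151)]
```

You can then regenerate or manually trim only the flagged rows instead of re-running the prompt for the whole dataset.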

8. Manually verify AI-generated data

No matter how detailed your prompts are, it’s still very possible for AI to make mistakes, and that holds for almost any AI model you use.

So make sure you manually verify each row before hitting publish. This is necessary if you want your pSEO website to be trusted by people and to withstand the wrath of Google search updates.

Final words

If it helps in any way, you can also go through this video that shows how to prepare data for programmatic SEO projects.

The video mainly talks about how you can leverage AI for your programmatic SEO project while preparing the dataset.

That’s it.

If you have a related query, kindly feel free to let me know in the comments below.
