Doing programmatic SEO is easy; doing it well is difficult.

The process is technical and has many points of failure: finding keywords, collecting data, creating the page template, internal linking, and publishing.

In this post, I explain some of the most common issues you’ll face while doing programmatic SEO and what you can do to avoid them.

If you want to learn more about the subject, I have also written a detailed 4000-word programmatic SEO guide that you can refer to.

Common challenges associated with programmatic SEO

Programmatic SEO is not about creating more pages; it’s about saving users’ time by giving them exactly what they need.

Let’s take a look at the common problems, challenges, and points of failure that you must know, avoid, and overcome:

1. Slower crawl speed

If you’re implementing programmatic SEO, you’re creating hundreds if not thousands of pages, and slow crawling is probably the most common issue you’ll run into.

In fact, I programmatically created around 1000 high-quality pages on a new website and after a month, only 350 got indexed on Google.

So is there anything that you can do to speed up the process? Turns out, there is…

- Go crazy about internal linking: Even if you’re programmatically generating the pages, create a system to effectively add internal links to all the pages. It not only distributes the link juice to all those pages but also helps search engines quickly discover the linked pages.

- Create more backlinks: Backlinks are another way for search engines to discover new pages quickly. The more backlinks you have pointing to your pages, the higher the chances of getting them indexed quickly. (Related: Check the latest backlinks and link building stats)

- Submit XML sitemap: Add your XML sitemap to Google Search Console (and submit it in Bing Webmaster Tools as well), and make sure it only includes URLs that are indexable and return a 200 HTTP response code. Another important thing: a single sitemap file can contain at most 50,000 URLs (and must be no larger than 50 MB uncompressed), so if you have more than 50,000 pages, split them into multiple sitemaps and submit all of them in Google Search Console.

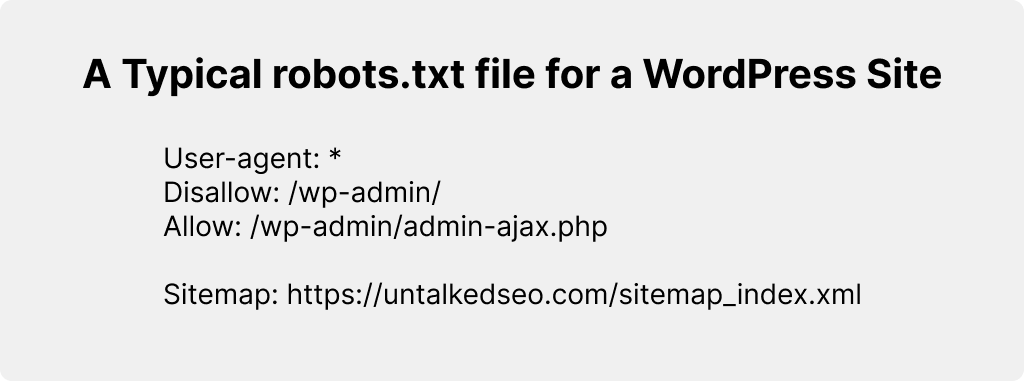

- Properly configured robots.txt: Set up your robots.txt to allow or disallow specific web robots, and list all your XML sitemaps in the robots.txt file. If you don’t want a certain section of your website crawled, you can block it here, but that doesn’t guarantee search engines won’t index those URLs (a blocked URL can still get indexed if other pages link to it). You can see what a typical robots.txt file looks like in the accompanying screenshot.
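The sitemap and robots.txt steps above can be sketched in a few lines of Python. This is only a minimal illustration, not a production generator: the `example.com` URLs, the `sitemap-N.xml` file names, and the `/search/` disallow rule are all made up for the example.

```python
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000  # per-file limit in the sitemap protocol


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `size`."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap(urls):
    """Render one <urlset> sitemap file as a string."""
    entries = "\n".join(f"  <url><loc>{escape(u)}</loc></url>" for u in urls)
    return (
        '<?xml version="1.0" encoding="UTF-8"?>\n'
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">\n'
        f"{entries}\n"
        "</urlset>\n"
    )


def build_robots_txt(sitemap_urls, disallow=()):
    """Render a robots.txt for all crawlers that lists every sitemap."""
    lines = ["User-agent: *"]
    # An empty "Disallow:" means nothing is blocked.
    lines += [f"Disallow: {path}" for path in disallow] or ["Disallow:"]
    lines.append("")
    lines += [f"Sitemap: {url}" for url in sitemap_urls]
    return "\n".join(lines) + "\n"


# Hypothetical site with 120,000 generated pages.
pages = [f"https://example.com/tools/{i}" for i in range(120_000)]
chunks = chunk_urls(pages)
sitemap_files = {
    f"sitemap-{n}.xml": build_sitemap(chunk) for n, chunk in enumerate(chunks, 1)
}
robots = build_robots_txt(
    [f"https://example.com/{name}" for name in sitemap_files],
    disallow=["/search/"],
)
```

With 120,000 URLs this produces three sitemap files (50,000 + 50,000 + 20,000 URLs) and a robots.txt that references all three. You would still need to write the files to disk and submit them in Search Console yourself.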

Also, you can drip-publish your pages over the course of 5-6 weeks.

For example, if I have to publish 5,000 pages then first, I’d let Google index 10-20 pages and see if there are any indexing-related issues on those pages. And if everything seems alright, I would then increase my weekly publishing rate gradually.

I’m not sure it really helps, but it should look more natural to Google than dropping all 5,000 pages at once.
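Here is one way such a drip schedule could be planned. It is a rough sketch under my own assumptions: a test batch of 20 pages in the first week, then a linear ramp over the remaining weeks. The function name `drip_schedule` and the ramp shape are illustrative choices, not a rule from Google.

```python
def drip_schedule(total_pages, first_batch=20, weeks=6):
    """Return pages to publish per week: a small test batch, then a linear ramp."""
    if weeks < 2 or first_batch >= total_pages:
        return [total_pages]
    remaining = total_pages - first_batch
    weights = list(range(1, weeks))  # 1, 2, ..., weeks - 1
    total_weight = sum(weights)
    batches = [remaining * w // total_weight for w in weights]
    batches[-1] += remaining - sum(batches)  # rounding leftovers go to the last week
    return [first_batch] + batches


schedule = drip_schedule(5000, first_batch=20, weeks=6)
print(schedule)  # [20, 332, 664, 996, 1328, 1660]
```

The first week is deliberately tiny so that you can check for indexing issues before committing to the bigger batches.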

2. Duplicate content

Duplicate content means the same or very similar text appearing on multiple pages of the site. Leaving it unaddressed can cause various indexing-related problems on your website.

And there’s only one way to solve this—create high-quality pages with unique information on each page. Aim to have most of the page content unique.

I would suggest you do 2 things while setting up your programmatic SEO site to avoid the issue:

- Finalize only keywords (head terms + modifiers) that are clearly distinct from one another, and drop the ones that are near-duplicates of a keyword you already target

- Pay extra attention while creating the page template and control the use of text snippets that will be repeated on every programmatically generated page
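To check how much of the template text is being repeated, you can compare generated pages against each other. The sketch below uses Jaccard similarity over 3-word shingles, which is one common technique I’m choosing for illustration; the 0.5 threshold and the sample page texts are assumptions, so tune them for your own template.

```python
import re


def shingles(text, k=3):
    """Return the set of k-word sequences (shingles) in the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Share of shingles two pages have in common (0.0 to 1.0)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_pairs(pages, threshold=0.8, k=3):
    """pages maps slug -> text; return (slug_a, slug_b, score) at or above threshold."""
    sets = {slug: shingles(text, k) for slug, text in pages.items()}
    slugs = sorted(sets)
    pairs = []
    for i, a in enumerate(slugs):
        for b in slugs[i + 1:]:
            score = jaccard(sets[a], sets[b])
            if score >= threshold:
                pairs.append((a, b, round(score, 2)))
    return pairs


# Hypothetical page texts: two differ only by the city name.
pages = {
    "a": "Best coffee shops in Austin with free wifi and quiet seating for remote work",
    "b": "Best coffee shops in Denver with free wifi and quiet seating for remote work",
    "c": "How to convert PDF files to Word documents online",
}
flagged = similar_pairs(pages, threshold=0.5)
```

Here `flagged` contains only the pair `("a", "b", 0.6)`, because those two texts differ by a single word. Comparing every pair gets slow with thousands of pages, so in practice you would probably run this on a random sample of pages.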

3. Low-quality or thin content

Not having enough content (thin content) or having low-quality content on the programmatically generated pages causes several indexing-related issues.

Your only goal should be to create pages that are super user-friendly and provide value to the users while keeping Google happy.

And there are a few things that you can do to overcome this challenge:

- Create sections that properly describe your product/service

- Add unique bullet points and descriptions of the sections

- Use more text instead of using infographics to show information

- Avoid hiding content in accordions or similar elements, because Google sometimes doesn’t read it

- Add descriptive captions and alt text to the images

- If possible, embed a video on the page

And remember, you have to add more content to the pages without keyword stuffing.

Here’s a detailed guide on finding head keywords for doing programmatic SEO.

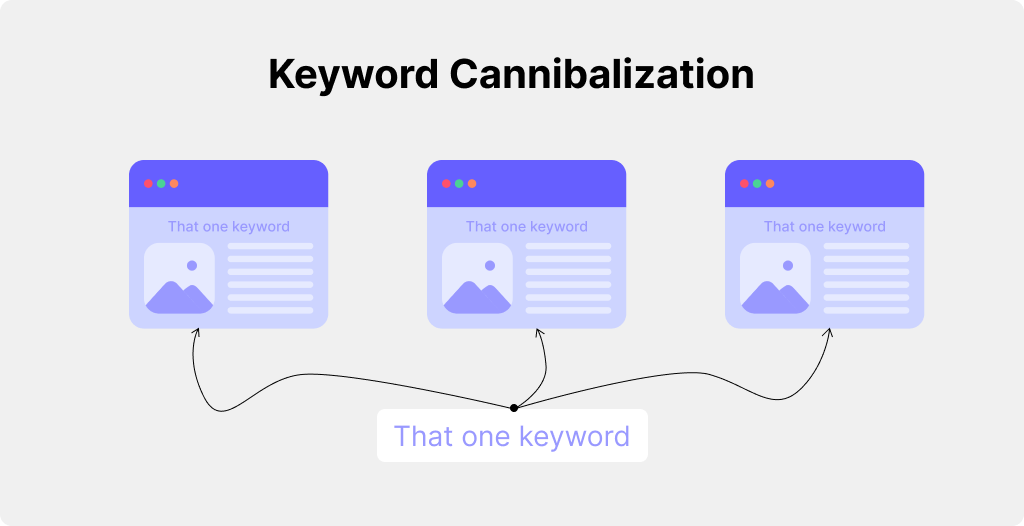

4. Keyword cannibalization

Keyword cannibalization arises when several pages on your site target the same keyword. Search engines then get confused about which page to rank, and may end up ranking none of them well.

Keyword cannibalization can be largely avoided by paying extra attention during keyword research and when finalizing the target keywords, including head terms and modifiers.

If two keywords are very similar and mean exactly the same thing, just choose the one with more search volume. Or, if your programmatic SEO setup allows it, use both (or more) keywords on the same page.
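A rough way to spot such near-identical keywords is to normalize each one (lowercase, drop filler words, ignore word order) and group the ones that collapse to the same form. The sketch below is a crude heuristic under my own assumptions: the stopword list is tiny and illustrative, and the search volumes are made-up numbers. Word order sometimes changes intent, so review the groups by hand.

```python
from collections import defaultdict

# Assumption: a tiny illustrative stopword list, not a complete one.
STOPWORDS = {"a", "an", "the", "for", "in", "of", "to", "and"}


def normalize(keyword):
    """Lowercase, drop stopwords, and ignore word order."""
    tokens = [t for t in keyword.lower().split() if t not in STOPWORDS]
    return tuple(sorted(set(tokens)))


def group_keywords(volumes):
    """volumes maps keyword -> search volume; return one target group per page."""
    groups = defaultdict(list)
    for keyword in volumes:
        groups[normalize(keyword)].append(keyword)
    result = []
    for keywords in groups.values():
        # Highest volume first; ties broken alphabetically.
        keywords.sort(key=lambda k: (-volumes[k], k))
        result.append({"primary": keywords[0], "secondary": keywords[1:]})
    return result


# Hypothetical keywords with made-up volumes.
volumes = {"hotels in paris": 9000, "paris hotels": 12000, "hotels in rome": 7000}
targets = group_keywords(volumes)
```

In this example, "paris hotels" becomes the primary keyword for one page with "hotels in paris" as a secondary keyword on the same page, while "hotels in rome" gets a page of its own.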

5. Low crawl budget

Google allots a crawl budget to your website based on how big your site is and how often you add new content. For newer sites, the crawl capacity limit is very low, but for bigger sites, especially news sites, the limit is very high and new content gets indexed quickly.

Google decides the crawl budget for a site based on the number of pages on the site, update frequency, page quality, link authority, and several other things.

Some things you can do to increase your crawl budget and avoid wasting it:

- Eliminate all the 404 errors on the site

- Use robots.txt to block URLs that do not need to be crawled

- Make your pages fast to load (use Google PageSpeed Insights to check, or this Bulk Page Speed Test tool to check all pages at once)

- Make sure to have enough server capacity

- Keep your URLs clean, and avoid adding query parameters

- Don’t have a page depth of more than 3

- Keep an eye on the Core Web Vitals report in Google Search Console (GSC)

- Avoid any kinds of long redirect chains, and

- Keep your sitemap updated
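The page-depth rule from the list above is easy to check if you have your internal links as a simple graph. The sketch below assumes "page depth" means the number of clicks from the homepage, and that you can export a mapping of each page to the pages it links to; the `links` data here is invented for the example.

```python
from collections import deque


def click_depths(links, home="/"):
    """Breadth-first search from the homepage; returns page -> clicks needed."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_depth(links, home="/", max_depth=3):
    """Return (pages deeper than max_depth, pages unreachable from home)."""
    depths = click_depths(links, home)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(p for p, d in depths.items() if d > max_depth)
    orphans = sorted(all_pages - set(depths))
    return too_deep, orphans


# Hypothetical internal link graph: page -> pages it links to.
links = {
    "/": ["/a"],
    "/a": ["/b"],
    "/b": ["/c"],
    "/c": ["/d"],
    "/x": ["/a"],
}
too_deep, orphans = audit_depth(links)
```

Here `/d` is four clicks from the homepage, so it is flagged as too deep, and `/x` is reported as an orphan because no page links to it. Orphans are worth fixing too, since crawlers that follow links from the homepage won’t find them.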

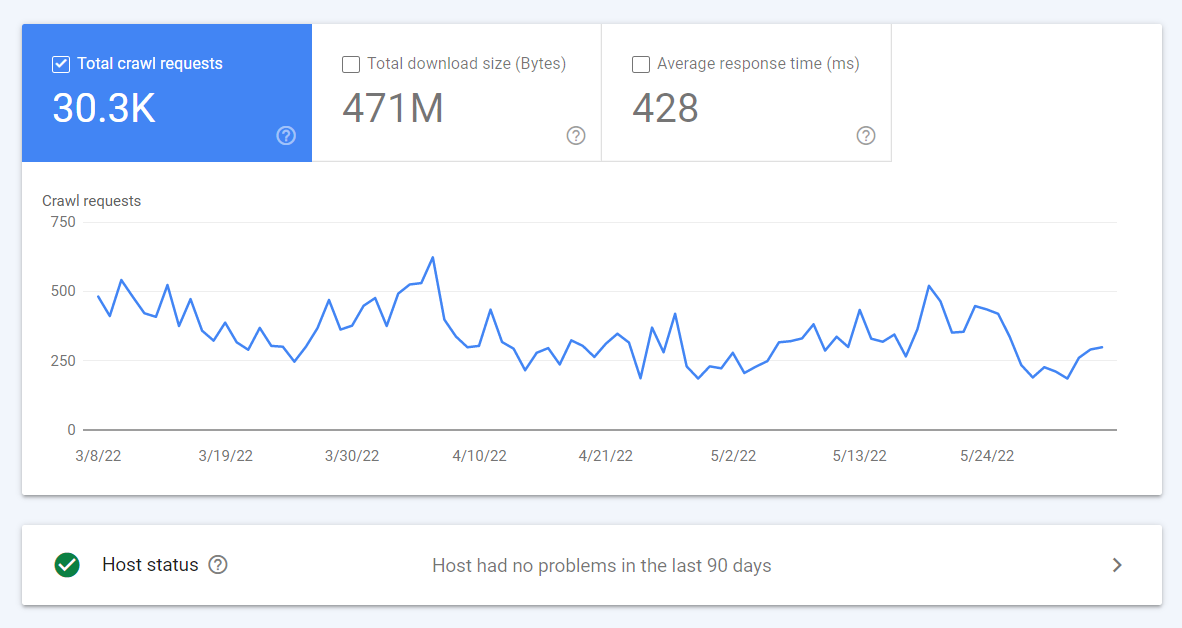

You can check the Google crawl stats report (see the screenshot above) in Search Console to identify problems your site might be having. This is especially important for programmatic SEO sites, as you deal with thousands of pages.

Related: A Complete Programmatic SEO Guide

Final words

What is the main goal of doing programmatic SEO?

Without these pages, your users would need to click several times before they land on what they are looking for. By creating programmatically generated pages that target long-tail keywords, you help users find the page they want directly from the search results.

First, think about whether users really need those pages; if they do, add information that makes the pages more user-friendly.

That’s all there is to it.

If you have a related query, feel free to let me know in the comments below.
