If you’re using AI for your programmatic SEO projects, you must be using APIs like OpenAI GPT and Google Gemini. And they cost a lot.

However, I finally found a way to run LLMs like llama2, mistral, llava, etc. locally on your computer and then generate outputs inside Google Sheets for multiple rows and columns. Yes, you will have to keep your computer running, but it doesn’t cost you direct money and is good for simple projects.

I have recorded a detailed video explaining everything step-by-step.

But if you prefer reading, please keep scrolling.

Local LLMs for pSEO Data Preparation

While this might not be the best way to do it, what I have done works perfectly. And if you understand programming, you can add further functionalities to further improve the workflow.

Now, let’s look at the step-by-step process…

1. Install Ollama on your computer

Ollama is an open-source software that lets you run large language models like llama2, mistral, and llava on your computer, with ease. It’s supported on macOS, Linux, and Windows as well. And to be able to use this perfectly, you need to have at least 8 GB of RAM (for 7B models).

First, you need to install Ollama by following the instructions on their official website. Once it’s properly installed, you need to run the following command to get started:

ollama run mistralI’m going with the mistral model, but you can choose your preferred model from the models library here and replace it in the above terminal command.

When you run the command for the very first time, it takes a longer time as it downloads the LLM which is usually a few GB.

Now that Ollama is installed, let’s move to the next step.

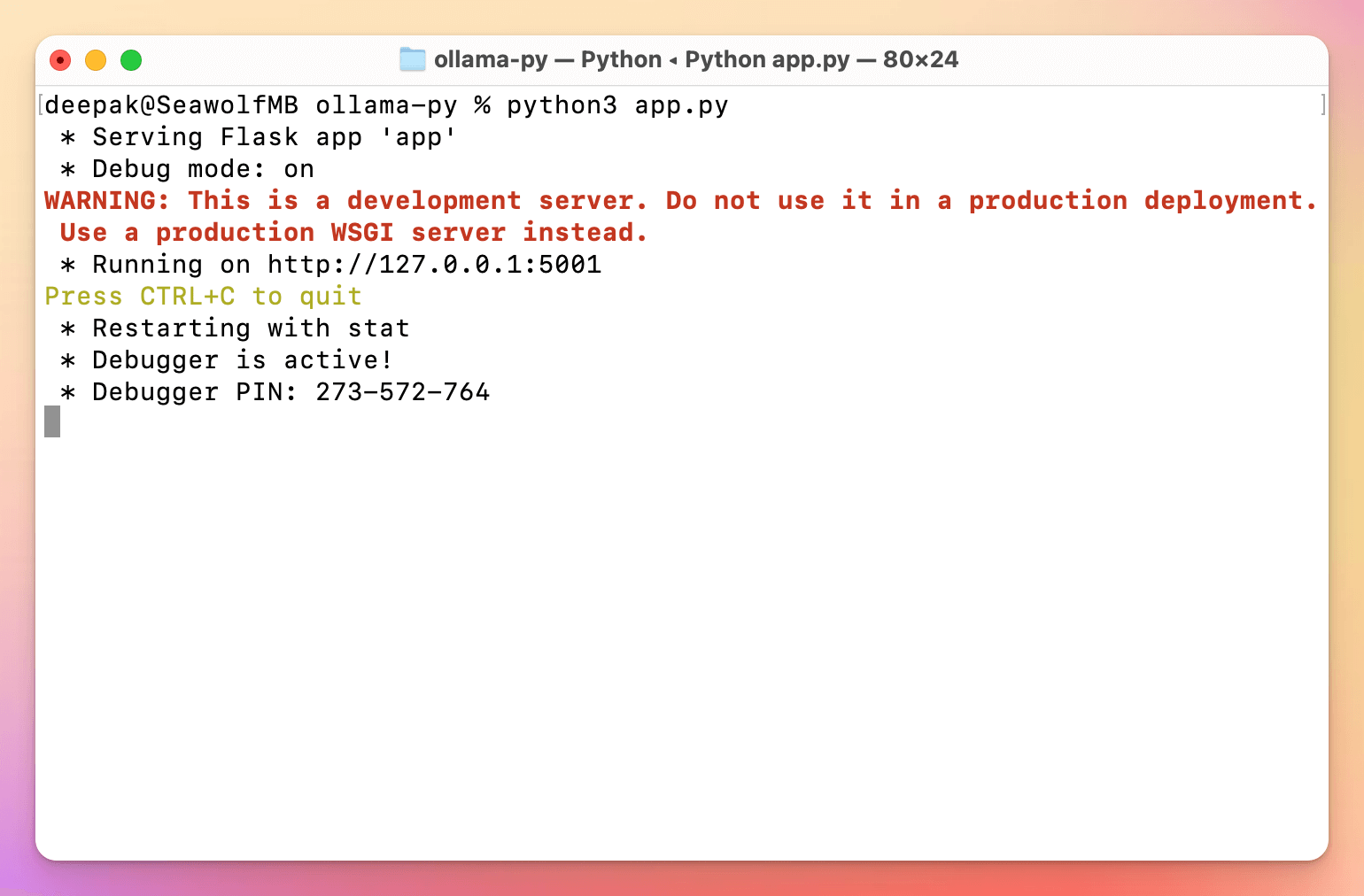

2. Create Web API using Flask

You need a Python script to create a web API using Flask. It sets up a simple web server that takes prompts through a specific endpoint and responds with generated output from Ollama.

For this, open any folder in your computer in a code editor like VS Code, create the app.py file, and copy-paste the following code into the file.

from flask import Flask, request, jsonify

import ollama

app = Flask(__name__)

@app.route('/api/chat', methods=['POST'])

def chat():

data = request.json

response = ollama.chat(model='mistral', messages=[{'role': 'user', 'content': data['content']}])

return jsonify(response['message']['content'])

if __name__ == '__main__':

app.run(debug=True, port=5001)After this, run the following command to start the Flask app:

python app.pyYou may need to use python3 here, depending on the Python installation on your computer.

And as soon as you run the command, the script keeps running on the PORT 5001, and now you need to use ngrok tunnel to make the localhost accessible online. Just make sure, you’re in the same folder in your terminal.

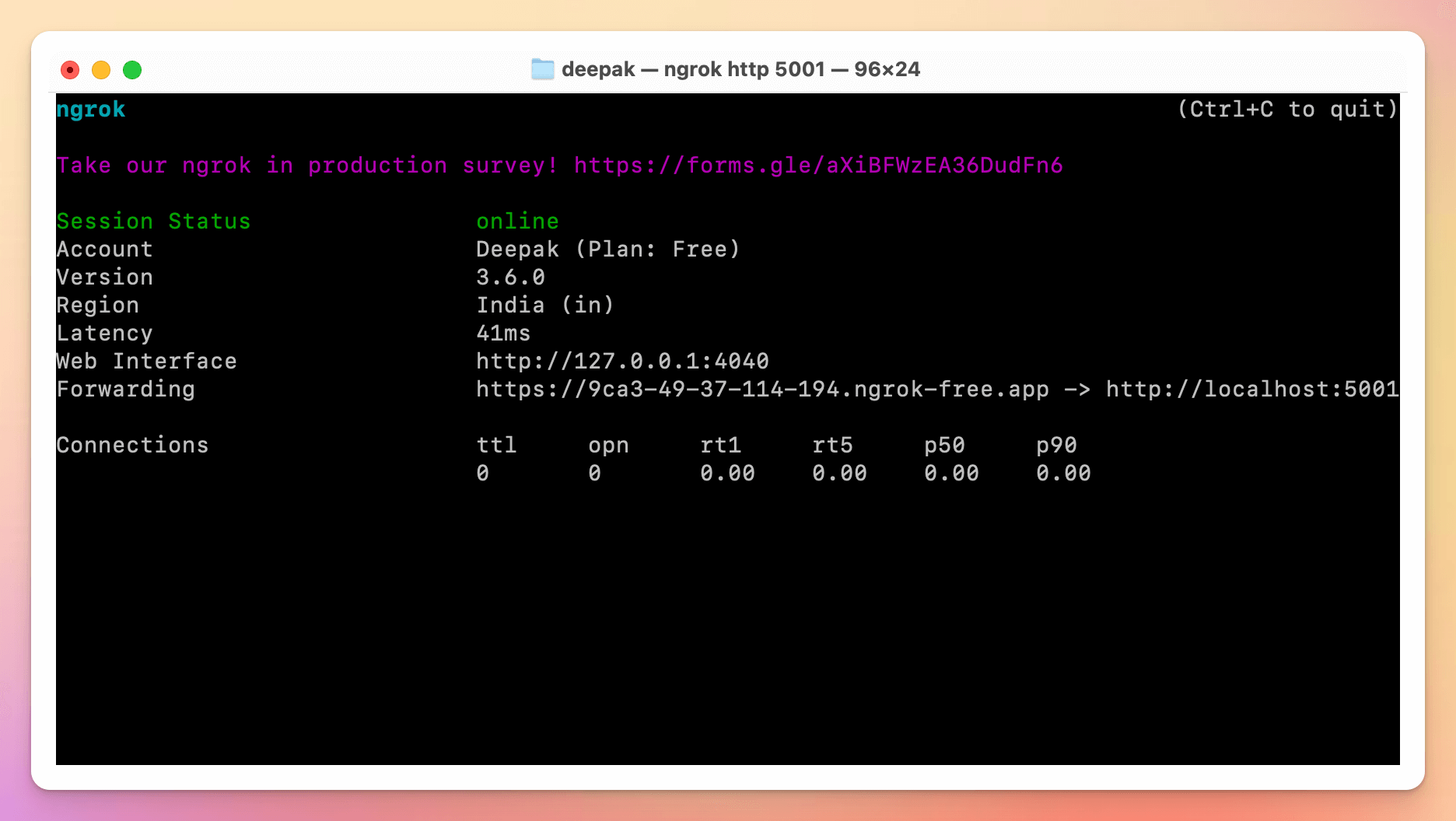

3. Set Up the ngrok Tunnel

For this, you will need to install ngrok on your computer and then create a free account to get the auth token command like below:

ngrok config add-authtoken <AUTH TOKEN>Once you run the command on your computer, ngrok gets added, and you’re ready to use it with the following command:

ngrok http 5001You’ll get a URL after running the command that you have to copy to your clipboard.

Now, let’s move to the next and final step.

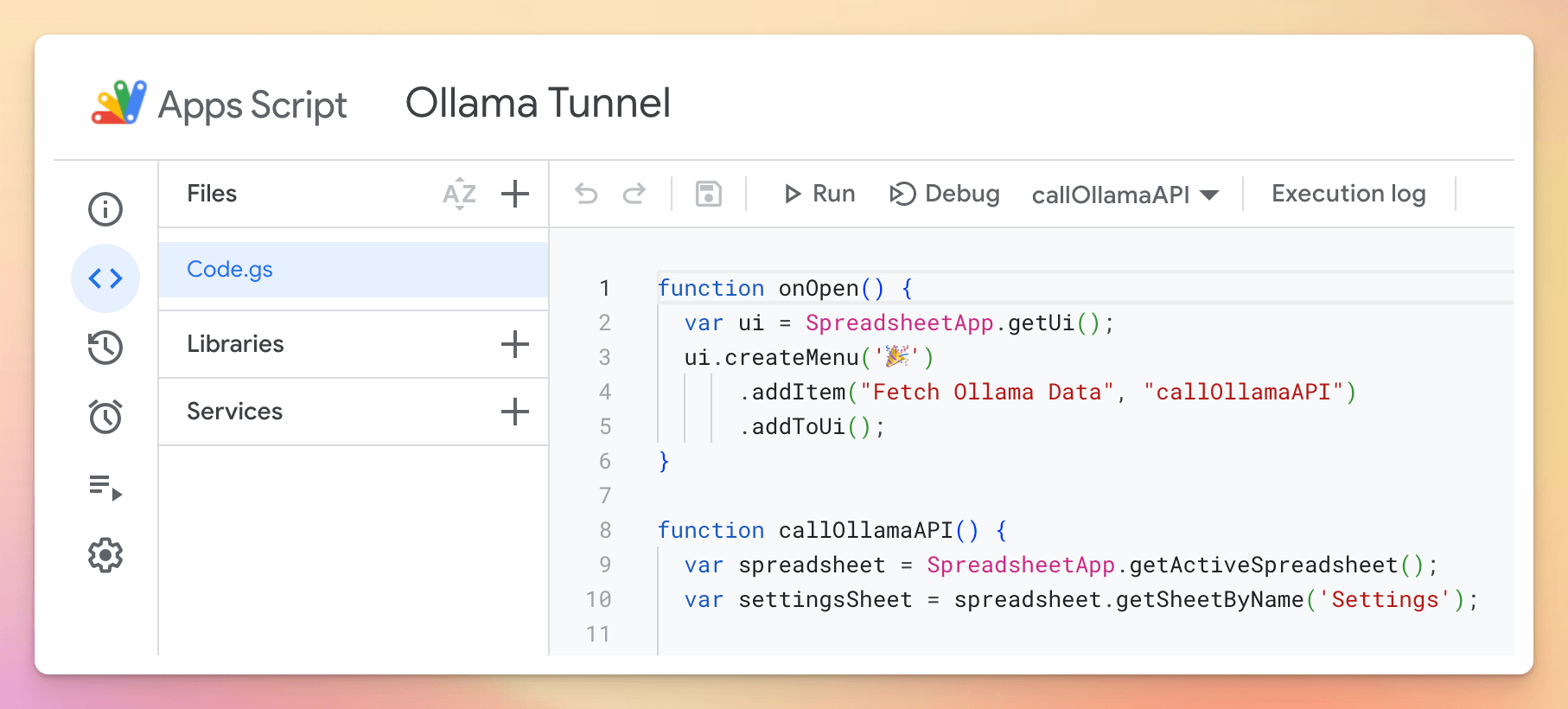

4. Set Up Google Sheets Apps Script

To call the API that we created, we’ll be using Apps Script inside our Google Sheets. But you’re in luck because you won’t have to write the code from scratch, I’ve already written the entire script below:

function onOpen() {

var ui = SpreadsheetApp.getUi();

ui.createMenu('🎉')

.addItem("Fetch Ollama Data", "callOllamaAPI")

.addToUi();

}

function callOllamaAPI() {

var spreadsheet = SpreadsheetApp.getActiveSpreadsheet();

var settingsSheet = spreadsheet.getSheetByName('Settings');

// Fetch the settings from Settings Sheet

var settingsRange = settingsSheet.getRange(2, 2, 5, 1);

var settingsValues = settingsRange.getValues();

var startRow = Number(settingsValues[0][0]);

var endRow = Number(settingsValues[1][0]);

var dataSheet = spreadsheet.getSheetByName(settingsValues[2][0]);

var promptColumns = settingsValues[3][0].split(',').map(function(item) { return letterToNum(item.trim()); });

var outputColumns = settingsValues[4][0].split(',').map(function(item) { return letterToNum(item.trim()); });

for (var i = startRow - 1; i < endRow; i++) {

for (var j = 0; j < promptColumns.length; j++) {

var promptCell = dataSheet.getRange(i + 1, promptColumns[j]);

var finalPrompt = promptCell.getValue();

if (!finalPrompt.trim()) {

continue;

}

var outputCell = dataSheet.getRange(i + 1, outputColumns[j]);

if (outputCell.getValue() === '') {

var ollamaData = {

content: finalPrompt

},

ollamaOptions = {

method: 'post',

contentType: 'application/json',

payload: JSON.stringify(ollamaData)

};

try {

var ollamaResponse = UrlFetchApp.fetch(`<TUNNEL>/api/chat`, ollamaOptions);

var ollamaTextResponse = ollamaResponse.getContentText();

// Remove the leading and trailing quotation marks from the JSON response and trim any leading/trailing whitespace

var ollamaOutput = ollamaTextResponse.slice(1, -1).trim();

// If there's a trailing quotation mark left, remove it

if (ollamaOutput.endsWith('"')) {

ollamaOutput = ollamaOutput.substring(0, ollamaOutput.length - 1);

}

// Replace \n with actual new line characters and \" with "

var formattedOutput = ollamaOutput.replace(/\\n/g, '\n').replace(/\\"/g, '"');

outputCell.setValue(formattedOutput);

} catch(e) {

console.error('Error calling Ollama API: ' + e.toString());

}

}

}

}

}

function letterToNum(letter) {

letter = letter.toUpperCase();

var column = 0, length = letter.length;

for (var i = 0; i < length; i++) {

column += (letter.charCodeAt(i) - 64) * Math.pow(26, length - i - 1);

}

return column;

}Just copy-paste the script, save with a proper name, select the callOllamaAPI function, and hit Run. When you run for the first time, you will be required to authenticate your Google account.

And now… you’re done.

Just like that, you will see outputs appearing inside the Google Sheets file. And all data is coming from the LLM that you’re running locally on your computer.

I have used this setup for some smaller and simpler programmatic SEO projects and it works perfectly. While these language models do not follow complex instructions, they are great for creating simple descriptions.

And of course, you can later use the Google Sheets for programmatic SEO in WordPress or using any other pSEO tools as well. If required, you can easily download the data in a CSV file as well.

Leave a Reply